This is part two in a series of articles detailing load balancing for Exchange using the KEMP virtual load balancer (VLB). In this article we will be configuring the general settings for the VLB before we configure specific settings for L4 or L7 load balancing.

The other articles in this series are:

- Introduction to Load Balancing for Exchange and Other Workloads

- How to Configure General Settings on the KEMP Virtual LoadMaster (this article)

- How to Configure an L7 KEMP Virtual Load Balancer (VLB) for Exchange 2013

- How to Configure an L4 KEMP Virtual Load Balancer (VLB) for Exchange 2013

- How to Restrict Exchange Admin Center Access From the Internet Using KEMP VLB

In the previous article I gave a brief overview of some of the fundamentals of load balancing and described how to download and install a free trial of the KEMP Virtual LoadMaster for your home lab. Now we will configure the general settings using the web interface.

Begin by logging into the VLB management interface from a web browser with the password you configured earlier. Remember the admin username is bal.

System Configuration

Click System Configuration on the left to expand these options. Under Interfaces you will see that the VLB has two NICs, eth0 and eth1. Since we are configuring a one-armed load balancer, only eth0 has an IP address, which it obtained from DHCP. This is the IP address used for incoming traffic that will be load balanced. It is also currently used as the management IP. We will not be using eth1, so its IP address is blank.

You will want to change the IP address for eth0 to a static IP. Enter the static IP address in CIDR format (e.g., 192.168.1.60/24) and click the Set Address button. After you confirm the change, your browser will be redirected to the new IP address.
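
If you want to double-check what a CIDR value means before you enter it, Python's standard ipaddress module can split it into the host address, subnet mask and network. The helper name parse_static_ip is hypothetical and only for illustration; the LoadMaster does not use it.

```python
from __future__ import annotations

import ipaddress


def parse_static_ip(cidr: str) -> tuple[str, str, str]:
    """Split a CIDR string such as 192.168.1.60/24 into (address, netmask, network)."""
    # ip_interface (unlike ip_network) accepts a host address with host bits set.
    iface = ipaddress.ip_interface(cidr)
    return str(iface.ip), str(iface.netmask), str(iface.network)


if __name__ == "__main__":
    print(parse_static_ip("192.168.1.60/24"))
    # ('192.168.1.60', '255.255.255.0', '192.168.1.0/24')
```

The /24 suffix is simply shorthand for the 255.255.255.0 mask, so both forms describe the same subnet.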

You'll notice that the link speed is set to automatic and it shows the current speed and duplex. You have the option to adjust the MTU (1500 is correct for most networks) and you can configure a VLAN if required.

Expand Local DNS Configuration. Here you can set a new hostname for the VLB if you wish (the default name is lb100). Click DNS Configuration to set your DNS server IP(s) and your DNS search domains.

Under Route Management, confirm that the default gateway IP address is correct. If you need to change it, remember to click the Set IPv4 Default Gateway button.
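
A quick sanity check for the gateway is that it must sit inside eth0's subnet, and it must not be eth0's own address or the subnet's network or broadcast address. The sketch below assumes the example address 192.168.1.60/24 and a gateway of 192.168.1.1 (the gateway value is my assumption). The function gateway_is_on_link is hypothetical and is not part of the LoadMaster.

```python
import ipaddress


def gateway_is_on_link(interface_cidr: str, gateway: str) -> bool:
    """Return True if the gateway is a usable address on the interface's subnet."""
    iface = ipaddress.ip_interface(interface_cidr)
    gw = ipaddress.ip_address(gateway)
    net = iface.network
    # The gateway must be inside the subnet and must not be the interface itself.
    if gw not in net or gw == iface.ip:
        return False
    # On normal subnets the network and broadcast addresses cannot be a gateway.
    if net.prefixlen < 31 and gw in (net.network_address, net.broadcast_address):
        return False
    return True


if __name__ == "__main__":
    print(gateway_is_on_link("192.168.1.60/24", "192.168.1.1"))  # True
    print(gateway_is_on_link("192.168.1.60/24", "192.168.2.1"))  # False
```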

Expand System Administration. Here you can change your password, update the KEMP LoadMaster license, shut down or restart the VLB, update the LoadMaster software, and back up or restore the configuration.

Click Date/Time to enter the NTP host(s) to use for accurate time. I recommend using a local Domain Controller and/or pool.ntp.org. You can enter multiple NTP server names or IPs separated by spaces. Click the Set NTP Host button to save the configuration. Then set your local timezone and click the Set TimeZone button to save it.

Expand Miscellaneous Options and click Remote Access. Change the port used for Allow Web Administrative Access from port 443 to a custom port, such as 8443. This will allow you to access the LoadMaster web UI using a URL such as https://192.168.1.60:8443. If you change the UI port, you will be able to load balance SSL port 443 traffic using the same IP; otherwise you will need to configure an additional IP address to load balance the same port. Remember to click the Set Port button to save the change. You will need to restart the LoadMaster for the port change to take effect. Do so under System Administration > System Reboot > Reboot. Once it restarts, access the web UI using the new URL and port, and log in.
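
The reason for moving the web UI comes down to a simple rule: only one service can own a given IP address and TCP port. The sketch below is a simplified model of that rule, not KEMP's code. The Listener type, its names and the helper functions are hypothetical. It shows that an Exchange HTTPS virtual service on 192.168.1.60:443 clashes with the web UI until the UI moves to 8443.

```python
from __future__ import annotations

from typing import NamedTuple


class Listener(NamedTuple):
    """A service bound to one IP address and TCP port (hypothetical, simplified model)."""
    name: str
    ip: str
    port: int


def find_conflicts(new: Listener, existing: list[Listener]) -> list[str]:
    # Only one service can own an IP:port pair, so any exact match is a clash.
    return [svc.name for svc in existing if (svc.ip, svc.port) == (new.ip, new.port)]


def admin_url(ip: str, port: int) -> str:
    # Browsers assume 443 for https, so the port only appears in the URL when it differs.
    return f"https://{ip}" if port == 443 else f"https://{ip}:{port}"


exchange_vs = Listener("Exchange HTTPS", "192.168.1.60", 443)
before = [Listener("Web UI", "192.168.1.60", 443)]
after = [Listener("Web UI", "192.168.1.60", 8443)]

print(find_conflicts(exchange_vs, before))  # ['Web UI']
print(find_conflicts(exchange_vs, after))   # []
print(admin_url("192.168.1.60", 8443))      # https://192.168.1.60:8443
```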

Expand System Configuration > Miscellaneous Options > L7 Configuration. Select X-Forwarded-For for the Additional L7 Header field. This configures the VLB to forward the client's original IP address to the real server so that it can be logged.
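
The LoadMaster only adds the header. Reading the header is up to the real server, or to whatever does the logging there. The sketch below shows the usual logic, under stated assumptions. The header is only trusted when the request actually came from the load balancer (192.168.1.60 here). The list is read from the right, because each proxy appends the address it received the request from. The function name and the 203.0.113.x / 198.51.100.x client addresses are hypothetical examples.

```python
from __future__ import annotations


def client_ip_from_xff(headers: dict[str, str], peer_ip: str, trusted_proxies: set[str]) -> str:
    """Return the original client IP, honouring X-Forwarded-For only from trusted proxies."""
    # A direct (untrusted) peer could forge the header, so use the TCP peer address.
    if peer_ip not in trusted_proxies:
        return peer_ip
    # HTTP header names are case-insensitive.
    xff = next((v for k, v in headers.items() if k.lower() == "x-forwarded-for"), None)
    if not xff:
        return peer_ip
    hops = [h.strip() for h in xff.split(",") if h.strip()]
    # Walk from the right, skipping our own proxies; the first other hop is the client.
    for hop in reversed(hops):
        if hop not in trusted_proxies:
            return hop
    return hops[0] if hops else peer_ip


if __name__ == "__main__":
    lb = {"192.168.1.60"}
    print(client_ip_from_xff({"X-Forwarded-For": "203.0.113.25"}, "192.168.1.60", lb))
    # 203.0.113.25
```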

Next, change the 100-Continue Handling setting to RFC-7231 Compliant. I found that the default value of RFC-2616 Compliant prevents MRS (Mailbox Replication Service) connections in hybrid scenarios with Office 365. Thanks to Brian Reid's article, which led me to this solution.

Configure a value for Least Connections Slow Start and click the Set Slow Start button. This is the number of seconds during which the LoadMaster throttles connections to a node after it comes online. The default value is 0, which means no throttling. A node that has just come back online has no current connections, so a least-connections scheduler would otherwise send it a burst of new connections. Slow Start prevents the load balancer from overloading it.
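
To illustrate why Slow Start matters, here is one common way to model least connections with a slow-start ramp. KEMP does not document its exact formula here, so the linear ramp, the (connections + 1) / weight score and all names below are my assumptions. EX2 has just come back with zero connections, while EX1 carries 50. With a 60-second Slow Start, EX2 initially loses to EX1. With Slow Start set to 0, EX2 would win every new connection.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RealServer:
    name: str
    active_connections: int
    online_since: float  # time (seconds) when the node last came online


def ramp(server: RealServer, now: float, slow_start: float) -> float:
    """Fraction of full weight: grows linearly from 0 to 1 over slow_start seconds."""
    if slow_start <= 0:
        return 1.0  # 0 disables slow start (no throttling)
    elapsed = max(now - server.online_since, 0.0)
    # Small floor so a node that has only just returned still gets the odd connection.
    return max(min(elapsed / slow_start, 1.0), 0.01)


def pick_server(servers: list[RealServer], now: float, slow_start: float) -> RealServer:
    # The +1 stops an idle node from scoring 0 and taking every new connection
    # while its weight is still ramping up.
    return min(servers, key=lambda s: (s.active_connections + 1) / ramp(s, now, slow_start))


busy = RealServer("EX1", active_connections=50, online_since=0.0)
fresh = RealServer("EX2", active_connections=0, online_since=999.0)

print(pick_server([busy, fresh], now=1000.0, slow_start=60).name)  # EX1
print(pick_server([busy, fresh], now=1000.0, slow_start=0).name)   # EX2
```

As EX2's ramp approaches 1, it receives a steadily larger share of new connections rather than all of them at once.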

Certificates

If you plan to do SSL offloading or SSL bridging you will need to install the endpoint's SSL certificate on the load balancer. As described in the first part of this series, with this configuration client connections terminate at the load balancer. The load balancer then sends traffic to the real servers as HTTP (offloading) or re-encrypts the traffic to the real servers (bridging).

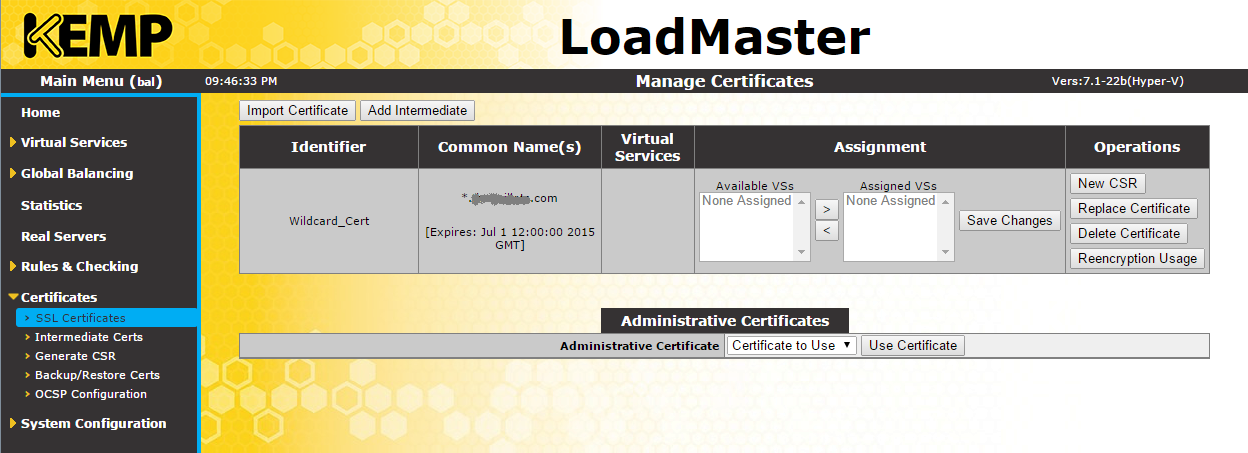

To install an SSL certificate on the VLB, click Certificates > SSL Certificates. Under Manage Certificates, click the Import Certificate button. Click the Choose File button to browse for the certificate file. In most cases this is a PFX file, which includes the certificate and private key. Enter the password for the PFX file in the Pass Phrase field and enter a useful Certificate Identifier.

Click Save to import the SSL certificate. You will now see that the SSL certificate is installed.

Almost all trusted third-party CAs use intermediate CAs to issue their certificates, so you should install these intermediate certs on the load balancer, too. Click the Add Intermediate button on SSL Certificates, then click the Choose File button and browse for the intermediate CA cert file(s) to install. These certs need to be .cer or .pem files. Once they are installed, you will see them under Certificates > Intermediate Certs.
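
A .cer file can be either Base64 (PEM) text or binary DER, and a CA sometimes ships several intermediates in one file. If you want to inspect or normalise such a file before importing it, the sketch below uses only Python's standard library. It converts DER to PEM with ssl.DER_cert_to_PEM_cert and splits a PEM bundle into individual certificates. The helper name is hypothetical. It checks only the encoding and does not validate the certificate contents.

```python
from __future__ import annotations

import re
import ssl

PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----\s+.+?\s+-----END CERTIFICATE-----", re.DOTALL
)


def to_pem_certificates(data: bytes) -> list[str]:
    """Return every certificate in a .cer/.pem file as a separate PEM string."""
    text = data.decode("ascii", errors="ignore")
    blocks = PEM_BLOCK.findall(text)
    if blocks:
        # Already PEM (possibly several certificates in one file).
        return [block + "\n" for block in blocks]
    # A DER certificate is an ASN.1 SEQUENCE, whose first byte is 0x30.
    if data[:1] == b"\x30":
        return [ssl.DER_cert_to_PEM_cert(data)]
    raise ValueError("not a PEM or DER certificate file")
```

For example, `to_pem_certificates(open("intermediate.cer", "rb").read())` (the file name is hypothetical) returns a list with one PEM string per certificate, which you can write out as .pem files.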

That does it for configuring general settings. In the next article I'll cover how to configure layer 7 load balancing for Exchange 2013.